When I first heard about the automatic tagging in the Google Photos service, I was completely unimpressed. As with most great ideas, the genius and tangible work at its core were not immediately obvious to me. However, after just a few days in the hands of the public, it was widely embraced as the way we should be tagging and curating our growing photo collections from now on.

From a computer science and engineering perspective, the accuracy of image recognition has been improving rapidly for some years. As long as four years ago I was using (and actually teaching) Live Photo to automatically recognize and tag members of my family. Even back then, the algorithms could distinguish between my triplet nieces, and they could be taught to recognize relatives in photos spanning a really wide range of years.

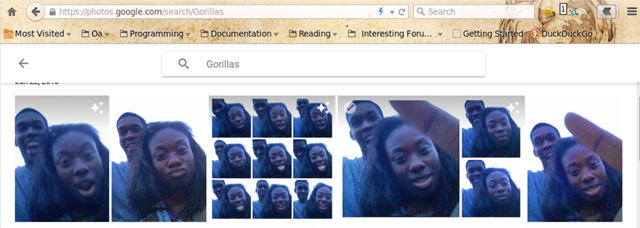

So I was genuinely shocked when, a week ago, we were confronted with the controversy over Google Photos mistakenly labeling black people as “gorillas”.

This is quite obviously horrible! Here are what sound like genuine and contrite responses from Google:

“We’re appalled and genuinely sorry that this happened,” said a Google representative in an emailed statement. “We are taking immediate action to prevent this type of result from appearing.”

And here…

Google executive Yonatan Zunger says, "[It was] high on my list of bugs you 'never' want to see happen," and that the company is "working on longer-term fixes around both linguistics -- words to be careful about in photos of people -- and image recognition itself -- e.g., better recognition of dark-skinned faces."

Before we hastily chalk this up to poor programming and testing, I think it is important to note that our history is littered with genuine scientific discoveries that surreptitiously (and sometimes blatantly) embedded assumptions rendering certain members of the human population invisible or, in some cases, labeling them as less than human. I have personally harbored the notion that science (in this case computer science) is isolated from prejudice because it looks at factual, measurable, and objective realities.

I am concerned that our industry has followed the lead of other scientific traditions and will blunder arrogantly and ignorantly into defining its own space as "neutral". History has shown us that the sciences have always been influenced, positively and negatively, by the cultures they spring from. Now, with semi-intelligent software making more of the decisions we normally make ourselves, I believe it is reasonable to ask what assumptions and predispositions will be embedded in it.

In Google's case, we have software that made narrow suppositions about race and phenotypes (skin tone, hair type, lips, etc.). Tagging pictures is relatively harmless, but what about software that makes much more consequential decisions? Take, for example, HunchLab, which its makers describe like this:

“HunchLab is a web-based predictive policing system. Advanced statistical models automatically include concepts such as aoristic temporal analysis, seasonality, risk terrain modeling, near repeats, and collective efficacy to best forecast when and where crimes are likely to emerge. This all lets you focus on one thing: responding.”

Predictive policing? To be clear, I can make no assumptions about the motivations of the HunchLab team, but as this solution is rolled out into metropolitan areas across the country, we should be asking who vetted the data this analysis is based on. With federal investigations having uncovered problems with police practices in multiple cities, how can we trust the data on which this analysis rests?
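To make the data concern concrete, here is a minimal, hypothetical sketch in Python. It is not how HunchLab works; it assumes a naive forecaster that assigns next period's patrols in proportion to last period's recorded incidents, and it assumes incidents are only recorded where police are present to see them. The district names, incident counts, and function names are all made up for illustration.

```python
"""Hypothetical sketch: a naive forecast built on recorded incidents
inherits whatever bias shaped the recording. Not HunchLab's actual model."""

# Assumption: both districts have the SAME underlying number of incidents per period.
TRUE_INCIDENTS = {"district_a": 100, "district_b": 100}


def recorded_incidents(true_incidents, patrol_share):
    """Assumption: incidents are recorded only when police are there to see them,
    so recorded counts scale with each district's share of patrols."""
    return {d: true_incidents[d] * patrol_share[d] for d in true_incidents}


def forecast_patrol_share(recorded):
    """Naive 'hotspot' forecast: allocate next period's patrols in proportion
    to last period's recorded incidents."""
    total = sum(recorded.values())
    return {d: count / total for d, count in recorded.items()}


def simulate(initial_share, periods):
    """Feed each forecast back in as the next period's patrol allocation."""
    share = dict(initial_share)
    history = [share]
    for _ in range(periods):
        share = forecast_patrol_share(recorded_incidents(TRUE_INCIDENTS, share))
        history.append(share)
    return history


if __name__ == "__main__":
    # Start from a skewed allocation: 60% of patrols in district A.
    start = {"district_a": 0.6, "district_b": 0.4}
    for period, share in enumerate(simulate(start, periods=5)):
        print(period, {d: round(s, 2) for d, s in share.items()})
```

Even though both districts have identical underlying rates, every period prints the same 60/40 split: the forecast never discovers that the districts are the same, because the records measure where police looked as much as what actually happened.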

What do we do?

The most obvious and broadest solution for our field is a push for greater diversity and inclusion. Unfortunately, people of color are already, for a variety of reasons, behind on almost every socio-economic measure.

At work

I have had this discussion with well-meaning colleagues for years, and it usually follows this pattern:

Me: We need to find ways to promote diversity in our team.

Colleague: I only want to hire and work with the best people.

Me: Our statements are not mutually exclusive.

I am glad to see big-company leaders take the first step of looking openly and honestly at their own hiring habits. The biggest firms are publishing diversity reports for everyone to see, and I would love to see more companies do the same. Please have a look at the following reports:

Pretty bad! One of the programs I found most interesting uses training courses designed to help us identify and manage our own internal biases. The truth, though, is that this problem does not begin with these giant companies; it is simply where it is most visible.

In our schools

Programs like CS First are free and increase students' access to and exposure to computer science (CS) education through after-school and summer programs. Clubs are established and organized by teachers and/or community volunteers.

To transform the demographics of the tech industry to more accurately reflect our society, we also need more minorities and women studying computer science and engineering at our colleges. Today, for example, women account for about 18% of computer science (CS) degrees, which is about half of what it was 20 years ago.

In our communities

Programs like Black Girls Code embody everything I want to see and inspire in underserved communities across the country. Here is a little about what they do, in their own words:

Black Girls CODE is devoted to showing the world that black girls can code, and do so much more. By reaching out to the community through workshops and after school programs, Black Girls CODE introduces computer coding lessons to young girls from underrepresented communities in programming languages such as Scratch or Ruby on Rails.

And though we at Black Girls CODE cannot overstate our happiness with the results of our classes, this is just the first step in seeking to bridge the digital divide. The digital divide, or the gap between those with regular, effective access to digital technology and those without, is becoming an increasingly critical problem in society. As more and more information becomes electronic, the inability to get online can leave entire communities at an extremely dangerous disadvantage.

Sadly, San Francisco’s digital divide falls along the same racial and social fault lines that characterize so many of society’s issues. White households are twice as likely to have home Internet access as African American houses. Bayview Hunters Point, Crocker Amazon, Chinatown, Visitacion Valley, and the Tenderloin have significantly lower rates of home technology use than the rest of the city. Sixty-six percent of Latinos report having a home computer, as opposed to 88 percent of Caucasians.

There is one constant inhibitor to successful diversity and inclusion that appears to cross all demographic boundaries: poverty. Defeating poverty is not just an act of charity but a moral imperative for any healthy society.
