Microsoft Research (along with the Media Interaction Lab & the Institute of Surface Technologies and Photonics) has been working on a new type of Human-Computer Interaction project known as FlexSense.

FlexSense

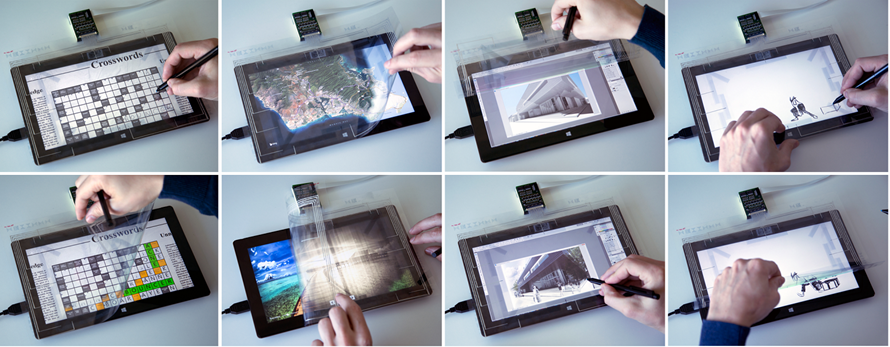

FlexSense is a transparent, thin-film sensing surface based on printed piezoelectric sensors. These basic sensors are laid out on a flexible sheet and provide enough feedback to eliminate the need for additional camera sensors (like Kinect or Leap Motion). A set of algorithms interprets the sensor input as you flex and twist the sheet (like a piece of paper) and sends signals to your device for subsequent interaction with software applications and games. FlexSense can be used for a variety of 2.5D interactions:

- Transparent cover for tablets where bending can be performed alongside touch to enable magic lens style effects.

- Layered input.

- Mode switching.

- High degree-of-freedom input controller for gaming and beyond.

Piezo Primer

Deforming a piezo element causes a change in the surface charge density of the material, resulting in a charge appearing between the electrodes. The amplitude and frequency of the signal are directly proportional to the applied mechanical stress. Since piezoelectricity reacts to mechanical stress, continuous bending gives this kind of application the signals needed to define twisting. Thus several strategically placed sensors are combined to reconstruct the real-world surface across all permutations of deformation (an A4 form factor is used to match common tablets).

A tale of two algorithms

The mapping from sensor readings to surface shape can only be performed accurately by combining two data-driven algorithms. Both algorithms require training based on sensor measurements and ground truth 3D shape measurements. The ground truth comes from a custom-built multi-camera rig that traces the 3D positions of markers on the foil with high accuracy, and this training phase enables both algorithms to infer the shape of the foil from the sensor data.

The first algorithm is based on linear interpolation, which is the simplest method of defining the trajectory between two data points. In this method the points are simply joined by straight line segments. Each point is then represented by a sensor (or some combination of sensors). This method has a clear weakness: it results in discontinuities at each point. Often a smoothing function is applied instead, such as cosine, cubic or Hermite interpolation. Even with these smoothing functions, mapping the final real-world construction is apparently fraught with inaccuracies, and so another algorithm was employed: learning-based continuous regression.
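To make the difference between straight-line and smoothed interpolation concrete, here is a minimal Python sketch. It is not the FlexSense implementation: the function names and the sample values are made up for illustration, and the samples simply stand in for values estimated at a few sensor positions.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between a and b, for t in [0, 1]."""
    return a + (b - a) * t


def cosine_interp(a, b, t):
    """Cosine interpolation: remaps t so the curve eases in and out,
    giving a zero slope at both end points instead of a sharp corner."""
    t2 = (1.0 - math.cos(math.pi * t)) / 2.0
    return lerp(a, b, t2)


def interpolate_profile(samples, points_per_segment, method=lerp):
    """Fill in values between consecutive samples.

    `samples` are hypothetical values at evenly spaced positions
    (for example, a deflection estimated at each sensor).
    """
    out = []
    for a, b in zip(samples, samples[1:]):
        for i in range(points_per_segment):
            out.append(method(a, b, i / points_per_segment))
    out.append(samples[-1])  # close the profile with the final sample
    return out


# Hypothetical deflection samples at three positions along the sheet.
samples = [0.0, 2.0, 0.0]
straight = interpolate_profile(samples, 4, method=lerp)
smoothed = interpolate_profile(samples, 4, method=cosine_interp)
```

Both profiles pass through the same sample values. The linear one has a sharp corner at the middle sample, while the cosine one flattens out as it approaches each sample.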

Learning-based continuous regression, in this instance, is the practice of displacing the surface and taking measurements over time. This approach models the relationship between two variables (sensor measurements and surface geometry), with the goal of producing a function that predicts the geometry from the sensor readings.
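As a rough illustration of the idea of learning a predictive function from paired measurements, the sketch below fits an ordinary least-squares line to a single sensor reading against a measured bend angle. This is a deliberately simple stand-in, not the regression model used in the FlexSense publication, and the training pairs are invented for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new reading."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: sensor readings paired with ground truth
# bend angles (in degrees), as a camera rig might provide them.
readings = [0.0, 0.1, 0.2, 0.3, 0.4]
angles = [0.0, 5.0, 10.0, 15.0, 20.0]

model = fit_line(readings, angles)
estimate = predict(model, 0.25)  # angle predicted for an unseen reading
```

A real system would regress from many sensors to a full surface shape, but the workflow is the same: collect paired measurements, fit a function, then use it to predict geometry from new sensor data.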

I watch this and the geek in me is excited about the future of human-computer interaction! The full FlexSense publication can be found here.
